This post is part of a homelab series I'll be rolling out over the next few weeks. I have two custom servers I've built for my homelab, one of which has an NVIDIA Quadro P2000 that I would like to use inside an Ubuntu VM.

In order to use a PCIe device inside your VM, you need to do a little magic on your Proxmox host.

Host Config (Proxmox)

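- To start, we need to enable IOMMU on the Proxmox host. With your editor of choice, open `/etc/default/grub`, comment out the existing `GRUB_CMDLINE_LINUX_DEFAULT="quiet"` line, and add `GRUB_CMDLINE_LINUX_DEFAULT="quiet amd_iommu=on"` below it. If you are using an Intel CPU, use `intel_iommu=on` instead. My own line also includes `pcie_acs_override=downstream,multifunction`, which makes the Proxmox kernel split devices into more IOMMU groups (at the cost of weaker isolation between them), and `video=efifb:off`, which stops the host's EFI framebuffer from grabbing the GPU.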

```
# /etc/default/grub
...
GRUB_DEFAULT=0
GRUB_TIMEOUT=5
GRUB_DISTRIBUTOR="Proxmox Virtual Environment"
#GRUB_CMDLINE_LINUX_DEFAULT="quiet"
GRUB_CMDLINE_LINUX_DEFAULT="quiet amd_iommu=on pcie_acs_override=downstream,multifunction video=efifb:off"
GRUB_CMDLINE_LINUX="root=ZFS=rpool/ROOT/pve-1 boot=zfs"
...
```

Save the file and then run `update-grub`.
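If you'd rather script the GRUB edit, here is a minimal sketch. It assumes the stock `GRUB_CMDLINE_LINUX_DEFAULT="quiet"` line is still present and GNU `sed` (as on Proxmox's Debian base). `set_iommu_flag` is a hypothetical helper, and it only adds the IOMMU flag, not the ACS override or framebuffer options from my line above.

```shell
# Hypothetical helper: set_iommu_flag FILE CPU_VENDOR
# Comments out the stock GRUB_CMDLINE_LINUX_DEFAULT="quiet" line in FILE and
# adds a new line below it with the vendor-specific IOMMU flag appended.
# Assumes GNU sed (for -i and "\n" in the replacement text).
set_iommu_flag() {
  local file="$1" flag
  case "$2" in
    AuthenticAMD) flag="amd_iommu=on" ;;
    GenuineIntel) flag="intel_iommu=on" ;;
    *) echo "unknown CPU vendor: $2" >&2; return 1 ;;
  esac
  # "&" re-inserts the matched line, so "#&" is the commented-out original.
  sed -i "s/^GRUB_CMDLINE_LINUX_DEFAULT=\"quiet\"\$/#&\nGRUB_CMDLINE_LINUX_DEFAULT=\"quiet ${flag}\"/" "$file"
}
```

On the host you would pass the vendor string from the `vendor_id` line of `/proc/cpuinfo`, e.g. `set_iommu_flag /etc/default/grub AuthenticAMD`, and then run `update-grub` as before.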

To verify that IOMMU is enabled, reboot and then run:

```shell
dmesg | grep -e DMAR -e IOMMU
```
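Once IOMMU is on, it's also worth checking how your devices are split into IOMMU groups, since VFIO assigns devices to a VM at the granularity of a whole group. Here is a minimal sketch, assuming the kernel's standard `/sys/kernel/iommu_groups` sysfs layout. `list_iommu_groups` is a hypothetical helper; its optional root argument exists only so it can be tested against a fake directory tree.

```shell
# Hypothetical helper: list_iommu_groups [ROOT]
# Prints "GROUP DEVICE" for every device in every IOMMU group. ROOT defaults
# to the kernel's sysfs directory; it is a parameter only for testing.
list_iommu_groups() {
  local root="${1:-/sys/kernel/iommu_groups}" dev group
  for dev in "$root"/*/devices/*; do
    # If the glob matched nothing (e.g. IOMMU disabled), it stays literal.
    [ -e "$dev" ] || continue
    group="${dev#"$root"/}"   # strip the root: "N/devices/0000:2d:00.0"
    group="${group%%/*}"      # keep only the group number: "N"
    printf '%s %s\n' "$group" "${dev##*/}"
  done
}
```

On the host, `list_iommu_groups | sort -n` should show the GPU's functions (`0000:2d:00.0` and `0000:2d:00.1` in my case) in a group of their own. If they share a group with unrelated devices, that is the situation the `pcie_acs_override` option in my GRUB line works around.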

- Add the following lines to `/etc/modules`, then save and exit.

```
# /etc/modules
vfio
vfio_iommu_type1
vfio_pci
vfio_virqfd
```
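To make this step safe to re-run, here is a small sketch that appends each module only if it isn't already listed. `add_vfio_modules` is a hypothetical helper that takes the file path as an argument (`/etc/modules` on the host).

```shell
# Hypothetical helper: add_vfio_modules FILE
# Appends each VFIO module name to FILE unless an identical line already
# exists, so running it twice does not create duplicates.
add_vfio_modules() {
  local modules_file="$1" mod
  touch "$modules_file"   # make sure grep has a file to read
  for mod in vfio vfio_iommu_type1 vfio_pci vfio_virqfd; do
    # -x matches the whole line, so "vfio" does not match "vfio_pci".
    grep -qx "$mod" "$modules_file" || echo "$mod" >> "$modules_file"
  done
}
```

On the host this would be `add_vfio_modules /etc/modules`.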

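- This next step assumes you have already created a VM in Proxmox. If you haven't yet, go ahead and create one. The default BIOS (SeaBIOS) is fine for Linux; if you are setting up a Windows virtual machine, set the BIOS to OVMF (UEFI) instead. Once finished, shut down the VM and edit its conf file. I'm setting the machine type to `q35` and adding some CPU options: `host` passes the host CPU model through to the guest, `hidden=1` hides the KVM signature from the guest (some NVIDIA drivers check for it), and `+pcid` enables the PCID CPU flag.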

```
# /etc/pve/qemu-server/100.conf
# Replace 100.conf with the ID of your VM.
...
cpu: host,hidden=1,flags=+pcid
machine: q35
...
```
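If you prefer not to hand-edit the file, the same two settings can be applied with a small sketch like this one. `set_conf_key` is a hypothetical helper; it assumes GNU `sed` and a VM with no snapshots (Proxmox stores snapshot sections, headed by `[name]`, in the same file, and this simple version does not tell them apart).

```shell
# Hypothetical helper: set_conf_key FILE KEY VALUE
# Replaces an existing "KEY: VALUE" line in a Proxmox VM conf file, or
# appends one if KEY is not present yet. Assumes no snapshot sections in
# FILE and that VALUE contains no "|", "&" or "\" (special to sed here).
set_conf_key() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}:" "$file"; then
    sed -i "s|^${key}:.*|${key}: ${value}|" "$file"
  else
    printf '%s: %s\n' "$key" "$value" >> "$file"
  fi
}
```

With the VM shut down, you would run `set_conf_key /etc/pve/qemu-server/100.conf machine q35` and `set_conf_key /etc/pve/qemu-server/100.conf cpu host,hidden=1,flags=+pcid`. Proxmox's own CLI should do the same through a supported path: `qm set 100 --machine q35 --cpu host,hidden=1,flags=+pcid`.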

- This next step is a bit of a pain, but it lets us properly identify our GPU so that we can add it to the VM later. Run `lspci -v` to print a list of hardware. The output will be lengthy, but somewhere in that list you should find your GPU. Here's what mine looks like:

```
...
2d:00.0 VGA compatible controller: NVIDIA Corporation GP106GL (rev a1) (prog-if 00 [VGA controller])
	Subsystem: NVIDIA Corporation GP106GL [Quadro P2000]
	Flags: bus master, fast devsel, latency 0, IRQ 148
	Memory at fb000000 (32-bit, non-prefetchable) [size=16M]
	Memory at d0000000 (64-bit, prefetchable) [size=256M]
	Memory at e0000000 (64-bit, prefetchable) [size=32M]
	I/O ports at f000 [size=128]
	Expansion ROM at 000c0000 [disabled] [size=128K]
	Capabilities: [60] Power Management version 3
	Capabilities: [68] MSI: Enable- Count=1/1 Maskable- 64bit+
	Capabilities: [78] Express Legacy Endpoint, MSI 00
	Capabilities: [100] Virtual Channel
	Capabilities: [128] Power Budgeting <?>
	Capabilities: [420] Advanced Error Reporting
	Capabilities: [600] Vendor Specific Information: ID=0001 Rev=1 Len=024 <?>
	Capabilities: [900] #19
	Kernel driver in use: vfio-pci
	Kernel modules: nvidiafb, nouveau
...
```
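Since the full `lspci -v` listing is long, it can help to filter it first. A minimal sketch: `gpu_slots` is a hypothetical helper that reads plain `lspci` output on stdin and prints the bus address of every VGA compatible controller or 3D controller (the class some NVIDIA compute cards report instead).

```shell
# Hypothetical helper: gpu_slots
# Reads `lspci` output on stdin and prints the slot (first field) of each
# VGA or 3D controller, e.g. "2d:00.0".
gpu_slots() {
  awk '/ (VGA compatible|3D) controller: / { print $1 }'
}
```

For example, `lspci | gpu_slots` narrows things down to a slot, and `lspci -v -s 2d:00.0` then shows the details for just that device.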

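Now that we've found it, let's get more info on it by running `lspci -n -s 2d:00` (use your own GPU's address in place of `2d:00`), which lists the numeric class and vendor:device IDs of every function on that device: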

```
2d:00.0 0300: 10de:1c30 (rev a1)
2d:00.1 0403: 10de:10f1 (rev a1)
```

Great, now we just need to add these PCI IDs to the vfio.conf file. In my case the IDs are 10de:1c30 (the GPU itself) and 10de:10f1 (its audio controller, class 0403), and both go on one line:

```shell
echo "options vfio-pci ids=10de:1c30,10de:10f1" > /etc/modprobe.d/vfio.conf
```
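Rather than copying the IDs by hand, you can pull them out of the `lspci -n -s` output. A minimal sketch, assuming the `slot class: vendor:device (rev xx)` layout shown above; `vfio_ids` is a hypothetical helper.

```shell
# Hypothetical helper: vfio_ids
# Reads `lspci -n -s <slot>` output on stdin, takes the vendor:device field
# (third column) of each line, and joins them with commas for vfio-pci's ids=.
vfio_ids() {
  awk '{ print $3 }' | paste -sd, -
}
```

Used on the host, that would look like `echo "options vfio-pci ids=$(lspci -n -s 2d:00 | vfio_ids)" > /etc/modprobe.d/vfio.conf`.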

- Next, we need to add some lines to Proxmox's blacklist conf file so that the host's own graphics drivers don't load:

```
# /etc/modprobe.d/pve-blacklist.conf
blacklist nvidiafb
blacklist nvidia
blacklist radeon
blacklist nouveau
```

Save and exit. This would also be a good time to restart your Proxmox host.
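After the reboot, you can confirm that vfio-pci (and not nouveau or nvidiafb) has claimed the card by checking the `Kernel driver in use` line, as in my `lspci -v` output earlier. A minimal sketch; `driver_in_use` is a hypothetical helper that reads `lspci -k -s <slot>` output on stdin.

```shell
# Hypothetical helper: driver_in_use
# Reads `lspci -k -s <slot>` output on stdin and prints the driver named on
# each "Kernel driver in use:" line (one per PCI function).
driver_in_use() {
  sed -n 's/^[[:space:]]*Kernel driver in use: //p'
}
```

On my host, `lspci -k -s 2d:00 | driver_in_use` should print `vfio-pci` once for each of the two functions.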

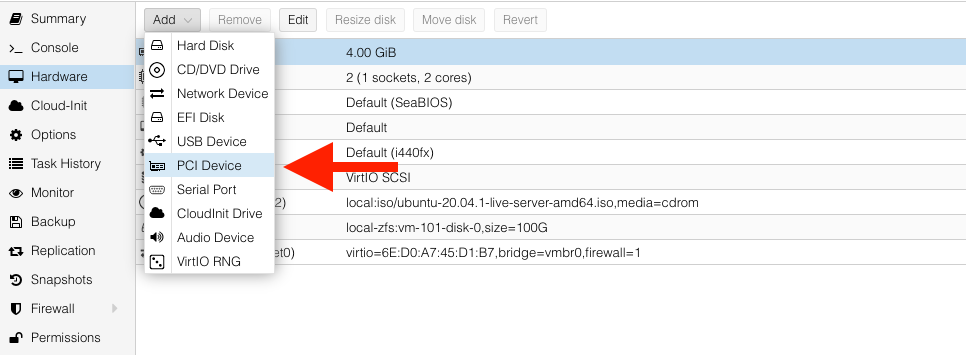

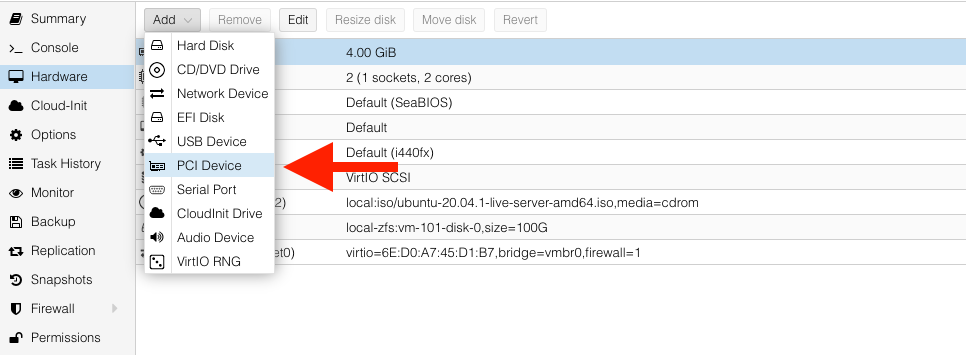

- Now we can finally add our GPU to the virtual machine. In the VM's Hardware settings, click Add and choose PCI Device:

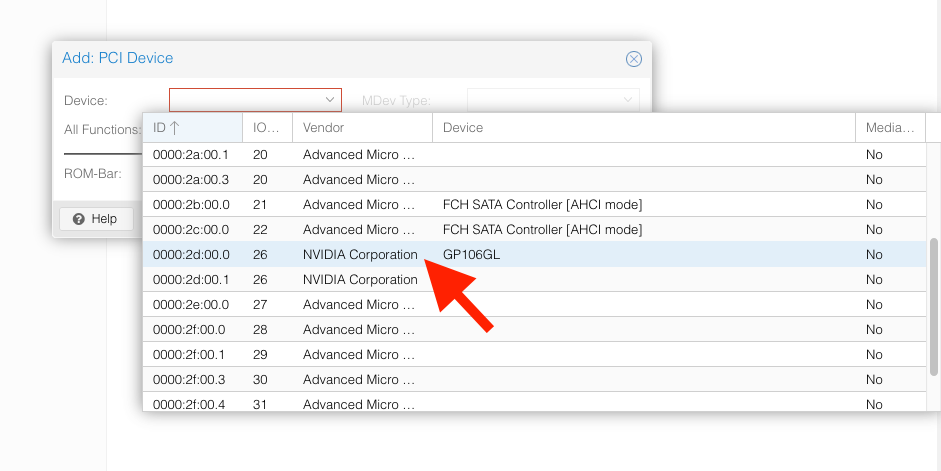

A list will appear; find and select your GPU:

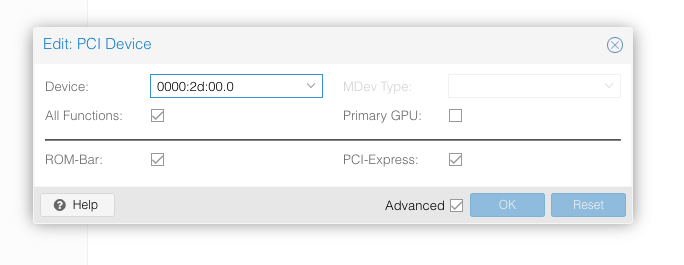

Be sure to enable All Functions, ROM-Bar, and PCI-Express.

If you also turn on Primary GPU, you won't be able to reach the virtual machine through the Proxmox console (you can still SSH into it!). Click OK and then start up your VM!

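The same PCI device can also be added from the host's shell. A minimal sketch, assuming the option names of Proxmox's `hostpci[n]` VM setting: giving the slot without a function suffix (`2d:00` rather than `2d:00.0`) corresponds to All Functions, `pcie=1` to PCI-Express, `rombar=1` to ROM-Bar, and `x-vga=1` to Primary GPU. `hostpci_value` is a hypothetical helper that only builds the option string.

```shell
# Hypothetical helper: hostpci_value SLOT [primary]
# Builds a hostpci option string for SLOT (bus:device with no ".function",
# so all functions are passed through) with PCI-Express and ROM-Bar on.
# Adds x-vga=1 (Primary GPU) only when the second argument is "primary".
hostpci_value() {
  local value="$1,pcie=1,rombar=1"
  if [ "${2:-}" = "primary" ]; then
    value="$value,x-vga=1"
  fi
  printf '%s\n' "$value"
}
```

For VM 100 and my GPU's slot, that would be `qm set 100 --hostpci0 "$(hostpci_value 2d:00)"`; as with the GUI checkbox, leave out `primary` if you still want the Proxmox console.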

The last step is to SSH into the virtual machine and check that our GPU is recognized. Run the following command; if all went well, you should be able to identify your GPU in the output:

```shell
lshw -c video
```

```
  *-display
       description: VGA compatible controller
       product: GP106GL [Quadro P2000]
       vendor: NVIDIA Corporation
       physical id: 0
       bus info: pci@0000:01:00.0
       version: a1
       width: 64 bits
       clock: 33MHz
       capabilities: pm msi pciexpress vga_controller bus_master cap_list rom
```
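If you want to check this from a script, for example after each guest reboot, one minimal approach is to pull the `product:` lines out of the `lshw -c video` output. `gpu_products` is a hypothetical helper.

```shell
# Hypothetical helper: gpu_products
# Reads `lshw -c video` output on stdin and prints the value of each
# "product:" line, e.g. "GP106GL [Quadro P2000]".
gpu_products() {
  sed -n 's/^[[:space:]]*product: //p'
}
```

Inside the VM, `sudo lshw -c video | gpu_products` should print `GP106GL [Quadro P2000]` for my card.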

If you see your graphics card in the output, congrats! The next step would be installing drivers, but I'll save that for another post.

Cheers!